Reporting on tech with Kelsey Piper

Kelsey Piper, my favorite tech journalist by a country mile, joined me to share our perspectives on tech journalism. We also discussed some of her recent reporting, and the social role of equity in the tech industry.

[Patrick notes: I add commentary to transcripts, set out from the rest of the text in this fashion.

A disclaimer at the top: it is extremely relevant to this topic that I once worked in a tech company PR department. Neither Stripe, nor anyone else I've been associated with, necessarily endorses what I say in my own spaces.]

[Patrick notes: You're going to fairly frequently observe that I retreat from the object level, discussing particular practices at OpenAI, to the meta level, how equity works in the tech industry. Partly this is because I have an enormous regard for OpenAI and have friends there. Partly this is because the general treatment of workers and equity in tech is far more important than any particular set of decisions at any company. And partly it is because Silicon Valley is a small place, and in that small place, I am conflicted up the wazoo.]

Sponsor: This podcast is sponsored by Check, the leading payroll infrastructure provider and pioneer of embedded payroll. Check makes it easy for any SaaS platform to build a payroll business, and already powers 60+ popular platforms. Head to checkhq.com/complex and tell them patio11 sent you.

–

Timestamps:

(00:00) Intro

(00:28) Kelsey Piper's journey into tech journalism

(01:34) Early reporting

(03:16) How Kelsey covers OpenAI

(05:27) Understanding equity in the tech industry

(11:29) Tender offers and employee equity

(20:00) Dangerous Professional: employee edition

(28:46) The frosty relationship between tech and media

(35:44) Editorial policies and tech reporting

(37:28) Media relations in the modern tech industry

(38:35) Historical media practices and PR strategies

(40:48) Challenges in modern journalism

(44:48) VaccinateCA

(56:12) Reflections on Effective Altruism and ethics

(01:03:52) The role of Twitter in modern coordination

(01:05:40) Final thoughts

Transcript

Patrick McKenzie: Hey everyone. I'm Patrick McKenzie, better known as patio11 on the internets, and I'm here with Kelsey Piper.

Kelsey Piper: And I'm Kelsey Piper, I'm a reporter for Vox's Future Perfect. I write about tech, science, people trying to get stuff done.

Patrick McKenzie: So, just for a little bit of the “how you ended up in this position in life” backstory, how do you find yourself working as a journalist in the tech industry?

Kelsey Piper: I had a blog – actually, this is embarrassing – but I had a blog on Tumblr which I'd had since I was a teenager, and where I wrote about my objections to the social justice stuff that was popular on Tumblr at the time that I think became kind of the woke stuff that's popular in a lot of places now – from a kind of consequentialist, effective altruist inspired, “the point of doing stuff is to make the world better, and I don't like any of your activism unless there's a route by which things get better.”

I met Dylan Matthews, who wrote for Vox and was apparently dissatisfied about how much of the stuff he was writing was based on what was in the news and not what mattered the most – and who'd gotten funding for a new section at Vox that was supposed to be, because it was grant funded, less about audience and more about writing about whatever matters the most.

I was like, “that's so cool, I'm really glad you're doing that.” He said, “I like your blog, do you want to do it?” And I was like, yes! Yes, I want to do it!

So I've been at Vox ever since – got to be six, seven years now. Initially we wrote a bunch about farmed animal welfare, which is really important if you think animal suffering is important; about pandemics, which we sounded a little crazy about in 2018 and then sounded really prescient about in 2020 and then now I think we sound like we're beating a dead horse when we talk about pandemics; and about AI, which is another one where, 2018, a lot of people were like, “why do you talk about AI? I don't see the relevance.” Now I think we're actually in a bit of a hype cycle where most people are saying stronger things about AI than I would endorse.

Over that time, I think I got into writing about science and the process of science, and about what it takes to actually build things and get stuff done, because so often when I dug into something, the real story wasn't about whatever was on the label – it was about how we'd learned something important about the world, or how we'd been really wrong about something about the world, or about why people knew something, and had known it for a while, and nothing had actually happened.

COVID was... full of that.

Patrick McKenzie: I think we'll get into some concrete examples later, but we'll do the (I think) standard-in-journalism thing, where you say the most recent stuff first and then go back as we go.

So full disclosure, I used to work in the comms department of a tech company, Stripe, which definitely does not necessarily endorse anything I say in my personal spaces. So we come from slightly different sides of the table in the sometimes synergistic, sometimes antagonistic supporting relationship that is the tech-focused news industry.

Recently you’ve been doing some important reporting on a variety of subjects there. Could you give everyone a rundown on it?

Kelsey Piper: Yeah. So I think I've been covering OpenAI, like I said, going back to 2018 when no one was taking AI seriously. That whole time they've been like, “we're not just a tech company, we're different because of the stakes of what we're trying to do. We're trying to build something that's going to transform the whole world, and we have this hybrid for-profit/non-profit structure so that we can make sure that the results of this huge thing we're going to try and pull off benefit everybody.”

A lot of people in journalism, certainly who I talked to, took this as boilerplate tech company hype. A very common reaction you get is like, “uh huh, the crypto people also say they're going to replace the whole global financial system. You have to say that to raise $80 billion. It doesn't mean anything.”

But I took and still take OpenAI pretty seriously about that, and I think that because of that, I was scrutinizing them even more closely than everybody else in tech – although also what I ended up running into I think would be an objectionable practice at literally any tech company.

When a person left OpenAI:

Every employee that I spoke to who left OpenAI in the last seven or eight years, they would be told that their vested equity in the company – which is a slightly weird kind of equity because of this hybrid for-profit/nonprofit thing, it's called a profit participation unit. It was treated in compensation agreements basically the same as every tech company treats equity. That's how everybody involved was encouraged to think about it – it had vested, and after it vested people assume it's yours.

But when they left, they were told their units would be canceled unless they signed this very restrictive agreement, which included non-disparagement provisions (you can't criticize the company) which was lifelong and which also included a non-disclosure agreement which encompassed the agreement itself.

Not only could you never criticize OpenAI in any way, you could also never tell anybody that you had agreements which might make you unable to criticize OpenAI.

Then it had some non-interference provisions that were pretty broad and overall just quite restrictive. Especially for some people who'd signed it seven years ago when OpenAI was a tiny nonprofit – [it’s] a difficult position to be in seven years down the road where you've agreed to never criticize this company that has changed really dramatically from the one you left.

Patrick McKenzie: A thing I've learned over my years in the tech industry is that even people who notionally have a supermajority of their net worth in equity often don't really understand how it works.

How about we give people the easy-to-understand version for what vesting, etc., means?

Kelsey Piper: Oh, yeah, absolutely.

Patrick McKenzie: Mind if I monologue for a moment?

Kelsey Piper: Go!

Patrick McKenzie: So without reference to any specific company, here’s a very common pattern in the tech industry: you're hired and you're given a promise for compensation in return for the supermajority of your professional efforts going to your new employer for an undefined period in the future.

[Patrick notes: An informal term of most employment contracts in tech, and a formal term in many, is that you not do professional things which would cause you to take your eye off the ball. This exists in uneasy tension with a cultural practice in many places of celebrating side projects. As someone who ended up in his quirky position in life largely thanks to being able to convince a Japanese megacorp to tolerate running a commercial side project for a few years, I have some thoughts on that topic. Perhaps surprisingly, they include "100% focus is a lot more valuable than 98% focus and if a company wants to buy the former they should be able to do that, assuming they are extremely upfront about that fact and pay accordingly."]

At the point you're hired, you'll be told what your salary is, and the company is basically always good for that salary to the dollar. Essentially everyone in capitalism understands that after a dollar transfers to your bank account, it does not ever go back to the company. That would be a wildly crazy thing to happen.

[Patrick notes: It is, unfortunately, not the case that every failing startup has made good on its last two weeks of payroll. This is the one monetary lapse that can cause me to think less of a startup founder. Payroll is a sacred trust, which I say not because I'm sponsored by a payroll company but because employees rely on it and also it's a failure mode of employer/employee relationships so well-established that the Bible warns you against it. (James 5:4)

In modern startup practice, when I hear "A company was so #*$(#ed that it missed payroll", my mind goes immediately to "... was it criminal fraud?"]

Patrick McKenzie: Equity is a little bit different in that the intuition for it is that the company would like you to earn your equity over time. The actual legal code that causes this is a little bit complicated – essentially you're given it all up front, but the company typically has a repurchase right for most of it and they lose that right over time.

So you gain true, durable ownership of your equity over time, and that process is called vesting. Without reference to any particular schedule, a very common thing in the tech industry is what's called “four-year vesting with a one year cliff,” which means you durably earn no equity until your 365th day, at which point you immediately get one quarter of it. Then you earn the rest ratably on a monthly basis over the next 36 months.
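As a rough illustration of the schedule just described, here is a minimal sketch in Python. The function name `vested_units`, the 4,800-unit grant, and the choice to count whole months of service are assumptions made for the example; real grants are governed by their own documents and may round or date things differently.

```python
def vested_units(grant_units: int, months_of_service: int,
                 total_months: int = 48, cliff_months: int = 12) -> int:
    """Units vested under a cliff-then-monthly schedule (illustrative only).

    Assumes vesting is counted in whole months of service and that
    fractional units are rounded down.
    """
    # Before the cliff, nothing has durably vested.
    if months_of_service < cliff_months:
        return 0
    # At the cliff, all months served so far vest at once (12/48 = one quarter);
    # after that, one more month's worth vests each month, capped at the full grant.
    months_counted = min(months_of_service, total_months)
    return grant_units * months_counted // total_months


# Hypothetical 4,800-unit grant on a four-year schedule with a one-year cliff.
for months in (11, 12, 13, 48, 60):
    print(months, vested_units(4800, months))
```

With these assumptions, nothing is vested at month 11, a quarter of the grant vests at month 12, and each later month adds 1/48 of the grant until month 48.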

That isn't the only equity that you'll probably own at the company – there are things like refresher grants, etc. – but the important intuition to come away with is that people in tech have arrived, through this complex social and legal iterated game that's been conducted over decades, at understanding vested equity to be pretty close to salary that has arrived in your bank account.

After equity is vested, you expect to keep it and to be able to participate in the upside from that point forth. So many of the labor issues in tech around equity are about firming up some of the edges around that broadly-held intuition that vested equity belongs to the employee in the same way that their house belongs to the employee.

Kelsey Piper: One example of that (which you probably know more about than me, but): there was a discussion when I was actually a young person working in tech, of companies where the equity took the form of options to purchase the company's stock, and if you left, you had to decide immediately whether to exercise those options. It was often more money than people had, and they didn't have enough time to, say, go to a bank and secure a loan.

There was a lot of discussion about extending the exercise window, which is maybe one example of firming up an employee's access to the equity they earned in a job.

Patrick McKenzie: If you go back to the mists of prehistory, equity was just this nice thing that companies did that was a kicker on top of your salary.

[Patrick notes: Even in the mists of prehistory, it was used as an inducement to get employees to accept wages which were below prevailing wages for their skill sets. It also essentially functions as a funding mechanism for startups; they lever their equity investments from professional investors with a matching sweat contribution from non-professional investors in return for common equity grants.]

Patrick McKenzie: That has very materially changed over the last couple of years. Many employment agreements are structured such that the majority of compensation will be equity in the success case for the company – and if you're not joining a company expecting them to hit the success case, there's a big question of, “what is anyone in this conversation doing?”

It's become more important – it's become more codified, more, let's say, labor-friendly over the years.

It was a respectable point of view for a while among, say, venture capitalists (who also have equity in the company, which they purchased with money rather than with the sweat of their brow) that it's a good thing when an employee leaves the company and does not successfully exercise their options. That essentially returns that value to all the other equity holders of the company, including the founders, current employees, future employees, and not incidentally, the venture capitalists. And – incredibly to me – people put this in writing on their website, in places where they expected tech employees to read it, and thought tech employees should endorse this perspective.

[Patrick notes: I am subtweeting an essay from a partner at a16z which argues that when ex-employees do not have their vested equity canceled that that dilutes current employees (and other equity holders like e.g. venture capitalists, though this part is unsaid) and, therefore, it is a good thing when ex-employees have their equity canceled. That is a robust and absolutely fair paraphrase of the argument.]

Patrick McKenzie: I'm as capitalist as the day is long. I will probably purchase more months of employee work than I will deliver as an employee over the course of my career by a pretty fair margin – but I view it as a moral failing in the tech industry every time an employee doesn't get the value of their vested equity.

I think that we as an industry should be as scandalized – as this must be fixed immediately, that someone was unable to purchase options because of liquidity issues or similar – as scandalized about that as we would be about, “well, we tried to pay their bank account and there was a hitch in the financial system so I guess they can't make rent this month.”

One of the interesting things about that – the cost of exercising options, specifically – is that it weighs differently on different sectors of the employee population. People who are older, might have been through the game once before, perhaps had an exit before, have money in the bank account where if you're asked to write a check for $150,000 to secure 80 percent of your compensation for the next couple of years, you say okay and do it.

[Patrick notes: I once had a manager observe offhandedly that "no one could afford their options" given the combination of current strike prices and generous equity grants, and then a bit of awkward silence ensued where people did a multiple-level "Who here has a generous equity grant and who here has a generous equity grant?" calculation followed by a "... Who absolutely could afford to exercise a generous equity grant?" Many forthright conversations about equity, and wealth generally, in the tech industry generate awkward silences. And thus me continuing to harp on this topic at length for a few decades.

For what it's worth: owing to starting my most recent employment with substantially negative net worth on paper as a result of a failed startup, I wasn't able to exercise options on day one. Japan's coffers have benefitted quite a bit from that temporary illiquidity, to put it mildly.]

Patrick McKenzie: There are many people in the world – people who are getting into tech for the first time, people who have just gotten out of college, people who, for whatever reason, do not have $150,000 sitting around – for whom there is a differential degree of pain there.

From a principles perspective, I think virtually everyone in tech would agree: regardless of whether you have $150,000 in your bank account or not, when you get an employee badge, you’re an employee of this company. We should keep commitments to you. That requires companies to do some consequential things that will optimize for some stakeholders, and pessimize for some stakeholders who would prefer to get free money as employees lose their equity. I think we should be enthusiastically in favor of the first course of action.

Anyhow, that is my digression about equity and its social purpose in technology.

Kelsey Piper: Certainly, I think you're right on the nose with it. Over the last decade, there has been more of a movement towards treating vested equity [that way], especially in a company like OpenAI where there are tender offers at which you can turn that equity into cash.

You're often advised to treat equity in very early-stage startups as a lottery ticket because it's going to be a long time before you can turn it into anything. But OpenAI employees, I think most recently a few months ago, got to turn their equity into cash – and since it's an $80 billion company, it's a lot of cash, not just the bulk of their compensation in expectation, but the bulk of their compensation if they immediately cash it out.

Patrick McKenzie: Can we explain for the audience a little bit about tender offers and how they've come to be a thing over the years?

Kelsey Piper: Yeah. So OpenAI as a private company, you own some PPUs – Profit Participation Units, these shares that are given out to employees that are effectively what equity in OpenAI is – and you cannot just sell those on to whoever you want.

(Which is very normal for a private company; it's basically the definition of a private company. They have an interest in who owns it.)

You can't arrange your own secondary market for it – but there is of course interest in it, and usually in late-stage private companies or private companies that are big enough for this to be relevant, what the company will do is host a tender offer: they line up some buyers who will buy from all of the employees, who want to sell because having some cash in the bank now is more valuable to them than the ‘lottery ticket’ at that point.

OpenAI hosts tender offers. I can't tell you how regularly – they're non-regular, and also people are not allowed to share any details of them, including the fact that they occurred – but they host tender offers, and at those, employees and former employees get to sell those profit participation units for millions of dollars.

Patrick McKenzie: I'm familiar with other tender offers that have occurred in the world, and the term “please don't mention this to people outside the circle of trust” is fairly common.

[Patrick notes: It has been publicly announced that my previous employer has from time-to-time instituted tender offers. In addition, I'm a tiny angel investor and have been around the block a few times in the broader industry.]

Patrick McKenzie: One reason it is (kind of annoyingly) common is, descriptively speaking, it has been the case in the past that when tech people do very well on their equity, that is itself a reportable event. It is often reported in a way that companies perceived as being counter to their interest – perhaps embarrassing.

So while it is enormously important within the ecosystem, and the fact of a tender offer will be enormously consequential to the employee base at large, and particularly to people who have been at the company for many years at that point, it's largely kept a little bit quiet.

Another interesting thing about tender offers is they're relatively new. The concept of it goes back forever, much like the concept of equity, but the dominant way that people got liquidity for equity as employees back in the day was waiting until the company IPO'd.

Because good companies that have produced a lot of value in the world have taken longer and longer to IPO over the years – for a combination of fun structural issues and Sarbanes-Oxley and a bunch of things that we don't have to go into – the previous trade, which is, “accept maybe less salary than you'd be hoping for, plus some equity which might be worth a lot of money in five years” has turned into, “accept a salary which might be less than you were hoping for, plus some equity which might be worth a lot of money in 10 to 12+ years.”

A lot of people who are willing to wait when they're in their first job out of college will have major life changes over the course of their 20s, 30s, 40s, and start thinking things like, “I would like to buy a house. I would like to send my kid to school. They don't accept paper wealth on mortgage applications – can I please turn this into real money?”

So tender offers have gone from something that was quite exceptional to something which I would say, broadly, is no longer exceptional for the most successful companies.

Kelsey Piper: OpenAI certainly qualifies as that. I think it's hard to answer the question of how much they're worth, but you see $80 billion given as an estimate of their valuation.

It's never going to (in a traditional sense) IPO because of the unusual public/private hybrid nonprofit structure, things like that. So tender offers are, I think in many cases, the only plausible way.

(I said they're never going to IPO. They could conceivably IPO, but they have even less pressure to do so than most of these companies – there's certainly no timeframe in which that is anticipated.)

So the tender offers are really it if you would like to sell your equity in the company.

Now, rumors had been circulating for a long time that OpenAI made you sign a restrictive non-disparagement agreement when you left. I had heard that for the first time years ago. But, it was recently, when I started digging into this, that I learned that the way they made you do that – and they don't make you do that, but they applied some pretty aggressive pressure tactics – was by saying, “we will cancel all of your vested equity if you don't sign this.” That was shocking to me.

A company trying to bribe former employees to all sign non-disparagement agreements is not that rare. (I don't really approve of it on a moral level; I think that society benefits when former employees are free to criticize a company, and so when the company pays the employee to shut up, they're both benefiting, but I don't know that that's a contract that the government should necessarily enable – but that's sort of a different question.)

Patrick McKenzie: A thing that might've happened at many companies over the years, in the event of layoffs or similar: severance in the United States is frequently not guaranteed under law, so it's at the discretion of the company whether to offer severance or not.

They might say, “okay, we will give you six months of your old salary as severance in return for a couple of things.” One is a thing you're obligated to do – please give your laptop back – and another is a thing you're not yet obligated to do, but which we're paying you a substantial amount of money for: non-disclosure, don't tell anyone you were involved in a layoff event, etc.

[Patrick notes: A fairly common term in finance, which is not common in tech, causes the phenomenon known as gardening leave. Certain finance industry employers expect employees, particularly senior executives and those in sales positions, to have knowledge and/or relationships they gained "on company time" which could be exploited against the interests of the current employer by the employee effective immediately on their departure. To avoid this, they require employees to not start work in a competitive field (effectively, anything connected to their job) for a period of a year or so after departure. This results in ex-employees twiddling their thumbs and/or working on non-remunerative hobbies, hence "gardening" leave. Crucially, this is a widely held industry expectation and it is priced into negotiations. You get, very literally, paid to do nothing, because doing something is a lot worse for your now-ex-employer than you doing nothing.]

The notion of one's employment terminating with a new contract with one's company is not exactly unprecedented in the world, and I have no particular comments on this contract, but I have thoughts.

Kelsey Piper: I think it is common enough that it wouldn't be remarkable that a company was doing that – and while some people, including me, would be like, “I think that since OpenAI claims to have these big implications, you should be able to criticize them,” I think if this hadn't been about vested equity it would not have caught fire in the way that it did.

But by saying, “we will cancel all your vested equity,” OpenAI was taking a really big step in the opposite direction of this general consensus that your vested equity is your vested equity, that it can’t be canceled for any reason.

A lot of ex-employees who I think would have been like, “thanks for the offer of severance, but I'm a highly paid AI employee, I can get a new job; I will turn down the severance,” felt very differently when it came to losing millions of dollars that they already thought of as theirs, that they had in the bank, that had been part of their compensation for work that they had already done over the last years – so it really hit differently, even though some elements of the practice individually aren't that rare.

Patrick McKenzie: We were discussing this before, and I largely try to keep my full cards on the table: I have no formal relationship with OpenAI. I have many friends and similar who work there, and broadly think highly of them, so I have relatively little commentary to give with respect to them specifically – but a beat I've been on for many years is the informal labor advocate for engineers and other software people.

[Patrick notes: I have <del>cost tech employers several hundred million dollars</del> increased the efficiency of capitalism by convincing technologists to negotiate salary better. That piece is, for better or worse, something of the canonical text for engineers on this issue. 500k+ readers a year for more than a decade; just the ones who email me to say thanks bring the impact into the eight figures per year range.]

Patrick McKenzie: In the capacity as informal labor advocate, it turns out that there are some practices that make one's life a little bit easier when one finds oneself in an adversarial moment in a high-trust relationship. You want to talk about your experience there?

Kelsey Piper: Yeah. I ended up reviewing emails between employees and HR on the topic of this agreement, and you could really tell which employees had some experience with, or had at least done some reading about, how to advocate for themselves in a very adversarial situation – especially because it's painful when a situation is unexpectedly adversarial, when it's in the context of a generally really positive work relationship.

I think a lot of people don't want to start being hard nosed, even if it feels like the other side has started being hard nosed, because you've worked with these people for years. They're your friends. It's kind of difficult.

I reviewed a lot of these emails, and the employees who were most effective at getting documentation of the situation that was actually going on – which later enabled revealing that it was going on, at which point the company apologized and completely changed their practices – were the ex-employees who, when the company strongly encouraged them to, “let's have a chat and discuss this over the phone,” were like, “I would prefer to discuss this in an email. Here are my concerns. You can answer them.”

They were the ones who said, “you sent me a contract with these features. I object to the following three, and I don't believe I am obligated to sign a contract with those features. If you send over one without those features, I will sign it right away.”

As a result, they got a very clear paper trail of the company saying, “let's discuss this over the phone,” being shifty in various ways, which was ultimately really helpful to them in getting the company to apologize and reverse the policy. I'm very grateful that some ex-employees had the know-how to do that.

Patrick McKenzie: So stepping maybe a little back from the particulars there to the general, “how does one interact with an HR department?”

I have some fairly nuanced thoughts on this.

One: there's an old thought – which is more true than it is false, but is not 100 percent explanatory – that HR exists to protect the company's interests, and it protecting employees’ individual interests is a happy side effect of mission number one.

I don't think that is entirely explanatory. Without offering comment on this particular case, a thing that one sees occasionally when dealing with complex bureaucracies is that people who are relatively low on the totem pole and have a limited set of actions available to them, and who are socialized in a particular fashion, might be choosing from a limited set of actions without intending that to, say, abuse the rights of another person in an arrangement with them.

[Patrick notes: See Seeing like a Bank for examples of this in the financial industry.]

Patrick McKenzie: Descriptively speaking, HR is high up on the org chart, but the typical person tasked with talking to a typical employee is very low on the org chart – and they're following a set of recipes which have been passed down from on high, which might or might not have had the appropriate amount of attention placed on them.

And, descriptively, 90% of the things that HR deals with are, I won't say “not very important” – they seem very important to everyone in the moment, but they're quite routine, like, “how many vacation days am I allowed?” “I'm moving between countries, can you help me with setting up the visa?” “I'm having an interpersonal disagreement – not at the level of a federal court case but at the level of ‘I would prefer this person apologize to me’ – with Tom. Can you mediate my dispute with Tom?”

When you have 90 percent of your day in a high trust environment (the company) where there is institutionally a court-adjacent system inside the company (that's HR), you would strongly prefer to avoid having every possible issue between two employees, or between an employee and the company, go to legal resolution, because that would be squandering your advantages of being a high trust environment.

They’re people who are usually in the position of, “my job today is to talk a bunch of employees through complex situations that they don't understand, and which I only partially understand, in this high trust environment, and minimize the amount of time the high trust environment breaks down and we have to bring in actual lawyers over this.”

I feel some amount of empathy for HR people who, without meaning to abuse anyone, do not necessarily give them advice that is great for their own interests.

So that is my somewhat nuanced take on the matter.

I believe I coined a term, Dangerous Professionals, for a set of mien and tactics and being able to portray oneself as being within a particular social class, which causes bureaucracies throughout the world to be more likely to agree with your version of reality in adversarial or semi-adversarial interactions.

Taking notes is one common tactic of Dangerous Professionals. For better or worse, I think in our industry, we have explicitly devalued note-taking. There's been a meme for a couple years in some tech spaces like, “You don't want to always ask a woman in the room to take the notes, because taking notes is a low-value thing, and that excludes them from being able to participate in the high-value conversation.”

I do think you should be mindful of which tasks you assign to which people, but taking notes is not a low-value thing. Every bureaucracy in the world runs on the paper trail. In the event there is a discrepancy in recollection or a dispute over what happened, the thing that is written down is what happened, point blank.

[Patrick notes: Is history written by the victors, or is writing history victory itself? For Dangerous Professionals, this is a distinction without a difference, since vindication in a legal or bureaucratic context will swiftly follow being the only person to have a presumptively authoritative record of the details under dispute.]

Patrick McKenzie: So, if one is ever in anything which sounds like it’s approaching an adversarial situation, just making sure that you have your version on paper (or electronic paper) is potentially useful.

So interesting question for you: when you were talking to tech employees, are they quite fastidious about what emails come from their personal email and what emails come from their corporate email and similar?

Kelsey Piper: High variance – I think there are people who are very attentive to that, and particularly if they're saying something that they think their ex employer might not like, then I think people tend to be more careful about that (or use Signal).

I think in some cases, people default to not thinking of that distinction as very strong while they’re not in a context where it is – and then if it abruptly is, you know, you sometimes get some problems.

Patrick McKenzie: A thing that very many employers ask of employees – which, on the face of it is a relatively reasonable request – is, “at the point where you leave your employment with us, we would like you to return all of our confidential information.”

That includes anything that was on your laptop – the laptop itself, obviously – and there will be explicit legal code about this in a contract that you have signed. But it is drafted extremely broadly. It will encompass any notes, communications, etc. etc.

A combination of that and the physical reality that, when you leave employment, you will give up your laptop and no longer have access to Slack or Google, means that, if one leaves employment with outstanding issues hanging over one's head and it is important that one has a paper trail about those outstanding issues, having the company be the only entity in possession of that paper trail is not broadly in one's interests.

[Patrick notes: Note that the outstanding issues may not be between you and your employer and you may not know the issues are outstanding at the point of departure! Taxes are the easiest example, but one could imagine needing information from your corporate inbox in e.g. a divorce proceeding.

A thing I did, which might be useful, was creating a shared folder between work life and private life and papertrailing to my manager "BTW, I have a shared folder for documents I'll need to keep forever; you naturally have no objection to this, right?" I made a habit of copying e.g. pay stubs into that folder when I received them. And not, you know, Obvious Corporate Secrets.]

Patrick McKenzie: Now, another thing the company will almost certainly say very early in your training and contractual life with the company is, “don't discuss work related things on services which are not controlled by the company” – to prevent, among other things, but descriptively accurately, leaks to the press.

So if one is nearing the end of one's employment and there's a paper trail that is important to oneself (I'm not your lawyer, I'm not an expert on this, take it for what it is), I might, in the course of writing notes to someone – there's this sort of ritual in the tech industry of saying “plus” and then a name, or “+cc” and then a name when you add someone to an email chain – add, “+my personal email (for record keeping).”

Ask your competent employment lawyer what this will do if there is eventually a dispute over it. What I predict they will tell you is that, if there is eventually a dispute of whether you were allowed to do that or not, but HR happily responded to that email and copied your personal email address in, then that's them giving written consent for information to be outside of a work system for a reasonable reason that you gave them and announced.

Combine that with the fact that, again, you're dealing with a relatively low sophistication employee who might not actively be in damage mitigation mode – you can preserve your options for the future if you need options for the future.

I would be a little bit careful about how to send email like that.

I will also say as a former individual who worked for a comms department, very many companies would prefer you to never leak things outside of the company; very many will promise to terminate your employment if you do that, and that is not a priori unreasonable to me.

But I think there are differences that are caused by the nature of labor actions, in particular, and the relationship between employers and employees, that would cause me to counsel very different things about protecting one's ability to enforce contractually guaranteed rights versus leaking the contents of the all-hands on Friday. (Don't leak the contents of the all-hands on Friday. No one benefits from that.)

That seems like a good segue to another topic. (And apologies for monologuing.)

Kelsey Piper: No, no – hearing you sort of talk through some of these issues is really valuable, and I think people who have heard you talk about this are in a much better position if their employer is doing something that they're not comfortable with, or even [a better position to] notice there's a problem in the first place.

Patrick McKenzie: So the relationship between the tech industry, the firms within the tech industry, and the media whose beat is tech has been a little bit frosty the last couple of years.

Kelsey Piper: Yeah, I would say that. (chuckles)

Patrick McKenzie: Do you have an origin story for that frostiness? I have one queued up, but…

Kelsey Piper: I have some thoughts – if you have one queued up, maybe I'll give my thoughts, because you're more likely to contaminate my take than the other way around.

I think the media is generally in a tragic spiral of the ad market getting worse, and a lot of couplings that were letting journalism ride on the back of stuff that produced a lot of revenue (and was maybe not as high quality) decoupling, and then leaving the journalism without much of a revenue source.

That has, in general, meant that a lot of people don't develop the expertise in doing really good tech journalism that you would like them to have, and that a lot of people who are doing tech journalism have goals for how many stories to write and what kinds of stories to write that make it hard for them to do high quality reporting.

Then I think that has combined oddly with the fact that social media lets everybody get out their own side of the story without going through journalists who used to have a little bit more of a gatekeeper-y role – and which I think is broadly good, like, I think it is a positive development that often companies make statements by posting them on the company's social media account. I think that gatekeeper-y role did a bunch of weird things.

But I do think that in its absence, some mutual dependence where journalists were a way for a company to tell the story of what the company was doing to the public – and also journalists were trying to keep the company accountable – sort of broke down, and now things are frostier and definitely in some respects worse.

Patrick McKenzie: That broadly agrees with my view on things. I think in the mists of pre-history there was this fun synergistic relationship between communications departments – who for various institutional reasons like to control all communication from a company going to journalists – and the journalists themselves.

Partly it was this tit-for-tat game where “we have this message to sell; we also have news which will help you sell copies of the newspaper, and you'll continue to get access to us on the news that is required to sell copies of the newspaper, in return for occasionally not exactly carrying press releases, but being open to talking to us about new consequential things we are doing.”

A less noticed but very real part of this informal agreement was that this company, like many other human institutions, is made out of humans. Occasionally, it will make mistakes. Occasionally, you're going to want to do things like call us up and say, “Hey, I heard you made a mistake. Probably you don't want to be identified personally as saying this, but can you confirm to me that this mistake actually happened?”

And as part of this sort of game of ongoing relationships, the company might say, “Okay, off the record for the purposes of your fact checking, yes, that division did do that thing. We are not in favor of it, we will fix it, and then here is a statement (which obviously because of incentives we're going to write in a way which is most favorable to our view of reality) – but the fourth estate, two thumbs up, you are getting the story correct on this one.”

That game continued for a while. If there were one moment that really shook the tech industry's confidence that the old regime was being respected by both sides, I think I would point at Cambridge Analytica.

My view on Cambridge Analytica, and this is getting increasingly in the past at this point, but during the 2016 election, I believe the media and the security state mutually convinced each other that the US election had been corrupted by – the technical details are a little bit out of scope, but you can round it to $200,000 of ad buys.

And… that didn't happen in the same world we live in. (There are many people in tech who can give you arbitrarily detailed explanations of, “hey, we're pretty good at this whole buying and selling advertising thing, and we can explain advertising.”)

It's just, no, this narrative is made up. And people expected, like, “oh, well, we're going to have a bunch of off the record conversations about, ‘no, this narrative's made up, maybe stop pushing it.’”

[Patrick notes: I hate being political, particularly in an election year, but the core accusation against Cambridge Analytica—that they downloaded the entire social graph of U.S. voters through malfeasance—was both a) practically a Facebook platform tech demo for a few years and b) frequently cited as an example of Wow The Obama Digital Team Really Gets It by members of the Obama digital team who really got this. They gave conference presentations on why having the social graph of likely voters was useful and how they had successfully recreated it. Also, props for epistemic rigor to Carol Davidsen in particular, who during the Cambridge Analytica fracas and against her professional interests was extremely explicit about this.]

Patrick McKenzie: But the stopping-pushing-it – whether off the record conversations happened or not, who could possibly speculate? – did the stopping-pushing-it happen? No.

For quite a while – and there were high tempers on all sides of the U.S. presidential election, what's new? – a combination of shock and outrage about the results of the election, plus looking for a scapegoat, plus perhaps a little bit of results-oriented thinking with respect to organizations that the media felt it had an antagonistic relationship with, resulted in them laying the election at the door of tech companies specifically, with this minor consultancy in England or whatever being a gateway for that.

So that is my brief retelling of the last bit of time.

Since then, the institutional culture of media has become perhaps a little bit more antagonistic to large concentrations of power in tech – and I think it is important to acknowledge tech has a lot of power in society. We have many smart people who are spending supermajorities of their effort on causing physical results in the world, and that is a recipe for getting physical results in the world. That is important to the society we're in. (If tech isn't, descriptively speaking, powerful and impactful on society, what are we doing with our lives?) (both laugh)

[Patrick notes: Tech has an uneasy relationship with acknowledging out loud that it has and/or wants power. So do people within it. I have a self-conception heavily informed by being a nobody next to a Japanese rice paddy selling bingo cards to elementary schoolteachers over the Internet. And then one day I found myself running the United States' vaccine location information infrastructure.

Tech has shipped many things much more impactful than that. It is trivially observable in real life that the iPhone matters more than the identity of the U.S. president does. Nobody takes the president into the bathroom with them. (... We must earnestly hope.)

We are reshaping the world in our image, by being really f-ing useful to it. People who are more naturally status-oriented than us assume that anyone who achieves this level of impact must naturally have sought it for self-aggrandization purposes and that denial of this is simply additional evidence. They fail to properly model tech minds, I think, but I think they're not wrong about realized level of impact.]

Patrick McKenzie: So broadly speaking, I can understand to some extent that the media (sometimes in a slightly self-flattering way) has a somewhat oppositional or watchful stance on centers of power.

For many years, PR departments, oh, we groan when we take our lumps on places where we deserve it to, because taking lumps is never fun, then we groan a little more taking lumps where we feel like we don't deserve it, but this is the nature of the game; then the last couple of years…

Many people in tech feel like the game is not being played anymore. There is no more tit for tat. There are a variety of well respected institutions in the United States that have “this institution defects in games with tech companies” printed on the masthead.

I think I wrote that line before I saw a tweet of yours about the New York Times. Do you want to catch people up on that tweet?

[Patrick notes: The now-deleted context of this tweet is that Matt Yglesias, an influential blue-leaning political pundit, mentioned that the New York Times specifically had a top-down narrative direction to be intrinsically hostile to tech companies in reporting on the industry. This was not news to the tech industry, to put it mildly, but Matt Yglesias is the kind of person that reporters cannot trivially dismiss. And so this tweet caused a cascade of common knowledge: everyone now knows that everyone knows that the Grey Lady, avatar and metonym for journalism, has an official policy to slant their tech coverage.]

Kelsey Piper: So, I had talked to some people who had left the New York Times recently, a couple of different people.

With former employees, there's always the, “is this a disgruntled employee” hypothesis, but they were for the most part fairly serious people whose judgment I respected.

They felt that they had gotten sort of a message about the New York Times’ goals in its tech coverage, which was like, “we're going to hold tech companies to account, and your aim with your tech reporting is to find things that need holding to account and do that.”

This is a lot more editorial lens on the content of coverage than is typical. The New York Times has been reported for a long time to be more top-down than a lot of other outlets in these ways, but to me, the amount of lens that they described crossed lines journalistically. I think a lot of people in tech have this feeling that they've stopped trying to report fairly.

Patrick McKenzie: I've joked that they don't hire people in tech comms departments for any combination of illiteracy, being unable to perceive social signals, or not being able to talk to journalists off the record.

So this was – it wasn't a secret, per se, in a way that lots of things are not true secrets, but it was not an acknowledgement of New York Times editorial policy – until one day Matt Yglesias just blurted it out on Twitter.

Kelsey Piper: Yeah, and when I saw Matt Yglesias blurt it out on Twitter I think some people were kind of incredulous because, you know, “the New York Times, very respectable newspaper,” which to be clear, does do great reporting in some areas. There are a lot of people at The Times who I think incredibly highly of, and there are people at The Times who have told me that they have not experienced editorial pressure and that they would be outraged if they did.

[Patrick notes: The Grey Lady doth protest too much, methinks.]

Kelsey Piper: But I think that in some departments, in some contexts, there was certainly an understanding that The Lens was going to be holding tech to account instead of, you know, a broader and more neutral sort of lens on what the purpose of tech reporting was.

Patrick McKenzie: The lens that they would bring to, say, White House reporting, where there's some amount of government malfeasance every day, and you want to cover government malfeasance; and there's some amount of, “the government is doing important work every day,” and you want to cover important work; and there's some amount of human interest, and maybe being sympathetic to individuals in it, yadda yadda yadda – which disappeared from tech reporting for a couple years.

Kelsey Piper: I think a lot of people felt its absence, and found it explanatory when they heard that had been to some degree a policy. It’s a policy that I think has had really damaging effects, because – going back to the OpenAI story – I sent an email before I went ahead with the piece.

Some reporters will hide the ball a little bit and just say, “Hey, I have some reporting, can we talk?” – I was like, “I have reporting that employees are told their vested equity will be canceled if they don't sign a secret non-disparagement agreement. Can we talk about this? Can I see the agreement?” (I wasn't expecting them to agree to that, but it would have been useful for my fact checking.)

They didn't respond at all. Just total radio silence.

I do think that five years ago, probably I’d have been more likely to get an off the record comment – and plausibly, for the company, I think it would have been to their benefit to have corrected this policy before it even hit the news.

And so maybe everybody would have won in a world where those relationships were a little stronger, and where the default press reaction to a negative story wasn't, “we will simply ignore them and hope that they go away.”

Patrick McKenzie: There’s a thing which has been known to happen in Media Land, which is, you know, this is a tit-for-tat game between organizations that understand that they are mutually synergistic:

A long time ago in a place far far away, well before I worked in any formal capacity, I did informal advocacy on behalf of people who were experiencing issues with their banks or debt collectors or similar.

A way since time immemorial to fix the symptom, “a grandmother in diminished socioeconomic circumstances in Kansas has been improperly foreclosed upon by the bank,” is to call up the bank's PR department and say you're the local newspaper or you're going to send a letter to the local newspaper about a very sympathetic person getting foreclosed upon, does the bank have comment?

A very common way that this has worked out historically is that the bank will say, “can you please hold off on this for a week or two while we get our ducks in a row?”

Everyone understands that it would be improper for you to ask them to kill the story. But it's within the rules of the game to say, “hold off on this while we get our ducks in a row and we will cancel the foreclosure,” which is the physical result that everyone wants – “and then we will take our lump for having done this,” but there will be a statement there, you know, “we had a mix up in procedures, we will do better in the future, and we have already fixed this for Ms. Mildred, who was perfectly on time with all her payments.”

And yeah, enthusiasm for that sort of thing has declined in recent years, and not just because people no longer make commitments – to have a bit of empathy for the place tech journalists find themselves in, the pace at which news is produced is so much faster than it previously was.

There was a time and place in which asking for a story to be delayed for two weeks was per se reasonable. I don't know your opinion on it, but if I was asking for something where I had a deadline, a two week delay from someone who's only peripherally involved in the production function would be a bit of a hard sell.

Kelsey Piper: Yeah. I think certainly, if it's a fast moving story, or if you're worried that other outlets are also on the trail, or if your sources are sort of going, “I might just go public independently” – all of those pressure against saying, “sure, you can have two weeks.” I do think you could still get some time that way, but I think that, like, lost social technology – once that doesn't usually work, then it's maybe not even something the press thinks of.

Patrick McKenzie: In the currently antagonistic relationship, some people working in PR departments might reasonably understand that if you ask someone for the old tit-for-tat game, the fact of you asking for the old tit-for-tat game will be reported in a way designed to embarrass you.

I like that phrase, “lost social technology” – we have mutually lost a bit of mutual grace that was deployed to the broader benefit of society, which sounds a little bit sad and rhymes with a few other places in society where grace and slack have been consumed by other institutional factors.

Kelsey Piper: So, making this a little concrete for me: in 2010, Patrick hears from a Vox journalist, who's like, “I heard something bad about the company. Can you confirm?” And then a fact that, yeah, in fact, sounds kind of bad. How does 2010 Patrick respond?

Patrick McKenzie: Well, in 2010 I would've been ending my job as a Japanese salaryman, so slightly different norms, but hypothetically counterfactual Patrick works in the AppAmaGooBookSoft comms department and has what Actual Patrick believes are modal points of view and modal amounts of internal efficacy with regards to this.

There was this thing that happened, and maybe it's at the level of a particular account of which we have millions of accounts. The first answer is going to be something pretty anodyne – “we respect our users’ privacy very much. It's a huge priority for the company. We've put millions of dollars of effort into it this year. Blah, blah, blah. Do you have any questions?”

And then the reporter might get back with, “but what about Robert?” And then you say, “Okay, off the record now, yes, Robert's account was deactivated,” or “yes, Robert's account was attacked by hackers. We will do what we can to prevent this in the future,” and – maybe likely in some shops, maybe likely not – a specific thing that we are committing to doing (off the record for the moment, but maybe we could circle back in a couple of weeks) is, “we are limiting the number of employees with access to this information.”

“Here’s a statement that we would like to go in this article.” And so there would be some balancing of the interests there.

If one flashes forward to a hypothetical Google press person in 2024, there's very little incentive to play this game anymore.

Plausibly, there's a big red button inside the PR office for escalating things to outside the usual customer service tree. So plausibly you hit the big red button with regards to the user that was inconvenienced and run it up the flagpole, but you won't even tell the reporter that you're running it up the flagpole, because there's just no upside anymore.

[Patrick notes: For more on so-called escalations in e.g. financial companies see here. PR is one of many teams which has this big red button available. So does e.g. Investor Relations, the Office of the CEO/President, Legal, etc.]

Patrick McKenzie: You probably won't try to engage in this, “Okay, can I trade you here? We'll do some concrete stuff here – I’ll admit to this now with a semi-exculpatory statement and then maybe in a couple of weeks, you report the thing that we did that is actually pro-social and good for the world.” Advancing that trade is just asking for more pain for yourself.

Can I give you a concrete example of this?

Kelsey Piper: Yeah.

Patrick McKenzie: So if hypothetically somebody is working at a tech company, you might hypothetically get emails from reporters that start like, “Hey, I see you work at tech company blah, blah, blah because I follow you on Twitter. I really like your thoughts on blah, blah, blah and I noticed that the company recently did blah, blah, blah. Do you have any thoughts to share with me?”

Where it might be phrased in such a way that the employee’s like, “Wow, I'm impressed that someone likes what I am doing and wants to tell our important message. I will respond to this.”

And then the actual story that gets written is cast in a fashion where anyone who made the mistake of responding to this will be mocked and embarrassed.

I'm thinking of one email in particular – I won't call out the particular publication at issue – but I did not respond to it. A number of people did, and then they got called unkind names in the next day's edition.

It was just like, “This is one reason we tell employees never respond to reporters unless you're in the comms department” – which, excellent reasons for that policy from the perspective of comms departments, by the way. (Unclear to me whether it is a policy that society should want companies to tell their employees. But complexities, complexities.)

So if you don't mind turning back the clock for a little while, the point at which I started following your reporting was early in 2021, because you wrote about a fun little side project I was doing at the time called VaccinateCA. You mentioned you had written in Future Perfect about pandemics before, and early 2020 and then early 2021 were kind of a tumultuous time. Can you reflect on some of the stuff that happened there?

Kelsey Piper: Yeah, so my first COVID story was February 4th or so, 2020. It holds up okay, but it was kind of like, “everybody's assuring you there's definitely no big deal, but even a small probability of a big deal would be a really big deal, so I'm not sleeping easy just yet” – which I wouldn't say was the most correct take but at least it left doors open to be correct later.

Then we did a bunch of reporting – Future Perfect was sort of on a different page from the rest of Vox for some of this.

[Patrick notes: The Japanese salaryman in me appreciates Kelsey's delicate understatement here.

"Is coronavirus going to be a deadly pandemic? No.", tweeted Vox, which deleted the tweet after they had beclowned themselves. They also published a piece mocking the tech industry for being hypochondriacs unwilling to listen to public health experts. The piece also implies cryptoracism as a motivation. ("These examples show there’s a fine line between general concern about the virus and targeted xenophobia toward Asian immigrants and Asian Americans.")

I presumably do not need to remind you how that story turned out. This aside is, incredibly to me, considered almost unfair in the culture that is journalism. How dare someone say we should take a status hit for being wrong? How could we have possibly known how things were going to turn out? We accurately reported what was told to us by credentialed experts. Don't you understand that reporters don't write the tweets or headings? We didn't actually call you racist (this time).]

Kelsey Piper: We did a bunch of reporting on questions like, “How is the virus primarily transmitted? Is it likely that there are more cases in the US than are being reported, given that it is illegal to test? Does it make any sense to close beaches and public parks, given that there is basically no outdoor transmission of this virus ever?”

Good questions like that, which we all had intense opinions on in 2020 and have never thought about since.

Then the vaccines happened, and I think for a lot of us who were pretty persuaded by the evidence that the vaccines worked really well against the strains at the time, that was the light at the end of the tunnel – you know, we could go back to partying.

My family found early availability at a location an hour from where we lived, made our appointments, and then put a party on the calendar for us and 50 of our closest friends three weeks after the fact. And we had the party, it was amazing!

Around that time, we were running into the fundamental limitation of the US government that it's really, really bad at building software on a deadline for anything important. The state of California – and all of the other states, but the state of California was most salient because I lived here – had a resource for helping you try and find the nearest available vaccine, but it was really bad. It was not very usable. It was totally unhelpful if there was anything about your case that was unusual in any way.

I think it basically involved having to call tons of pharmacies yourself, which meant that all of the pharmacies were being constantly bombarded by a billion calls and then not able to do their primary job of prescribing necessary medication to people.

[Patrick notes: Kelsey was the first reporter to cover VaccinateCA, which is described below. For much more detail, see the writeup I did for Works in Progress.]

Patrick McKenzie: My apologies for being a little bit pedantic on the timeline here, but I have this one seared into my memory due to professional events.

MyTurn – which was I think the resource you're referring to, a state of California initiative – debuted in approximately March of 2021.

The first doses of the vaccine were delivered in early December, and then my recollection is, as of mid-January, California was reporting to the United States federal government that it was successfully administering 25 percent of the doses that it received, a number which was extremely concerning to me and which placed it 48th or so in the nation in terms of efficacy.

[Patrick notes: It was little noticed that California was reporting this to the U.S. federal government until Bloomberg put it on a webpage, at which point the California governor made not being embarrassed by that web page a top priority for the state of California. And so let this be a lesson to you: sometimes embarrassing the government is extremely instrumentally useful, because avoiding embarrassment sometimes causes more urgency than merely avoiding people dying.]

Patrick McKenzie: I subsequently heard from people in California that it was possible that the fact was not as bad as that number made it out to be, because it was possible that the state of California was, in addition to being bad at administering the vaccine, bad at counting and possibly it was an undercount.

And I said, do you think that undercount is, “we're actually administering 100% or 95%, but counted down to 25 because counting isn’t important right now”? And they're like, “oh no, it's nowhere near that.” I'm like, “okay, the competence issue is very genuine.”

In January of 2021, I tweeted out something after reading about 10 times in the newspaper – I say newspaper, various online publications, I’m living in central Japan at the moment – that it seems crazy that people are calling all across the state of California to 20, 40, 60, different doctor’s offices, hospitals, pharmacies, to find one place that has the shot available for something which, based on the agreed upon prioritization scheme, they are immediately eligible for.

I think, the state of California plausibly has at least one person in it capable of making a website where you could centralize this information. Since that has not been done yet, maybe people should do CivTech, civil technology, a quick project to centralize this information, publish it to the internet, and then keep doing that until no one needs to read that webpage anymore.

Then I thought, that's a pretty weak tweet by itself, and I replied to it saying, “and if you're worried about your server costs crushing you, send me the bill, I'll take care of it.”

Karl Yang followed me on Twitter and was like, “That's something I could actually do.”

He opened up a Discord server – which is normally used for playing video games together and killing dragons and whatnot – invited ten of his closest friends from the tech industry, and said, “We're going to OSS the availability of vaccines in California by, it's currently 10 PM – we think we can get this done by 9 AM. Let's go.”

So he posted that as a reply and I lurked in. I was like, “I’ve designed some systems involving calling large numbers of organizations before, I have some relevant expertise. I'll give you that, and then I’ll tweet about it when it launches, and then I'll be on my way.”

Then one thing led to another and three days into it, the servers were not quite melting. I think we were getting 60,000+ people a day visiting. We were starting to get reports of end-to-end success with the model, where we had called the pharmacy, put the information on the website, people discovered that information from the website, successfully got an appointment at the pharmacy, and then their parents – typically in the case of who was eligible at that time – were successfully vaccinated.

And so on day five, I went to the team and said, “We were all just kind of doing this as a quick over the weekend hackathon, and most of us were expecting to go back to work on Monday.

“We shouldn't. We should be all anonymous for as long as necessary. There needs to be an organization here. Some of us need salaries, so there needs to be some money found, etc., etc. – I'm looking around the room, and there doesn't seem to be an executive here. That's unfortunate; I nominate me. I will figure out the money thing, figure out the entity thing, and run this until I can find a better person to hand it off to.”

The initial team of coordinators agreed on day six or seven that I would run it, and then we were kind of off to the races.

Interestingly, from the perspective of common strategies and the funny way that sometimes maximal transparency is in society's interest and sometimes not, the picture I gave everyone on day seven was, currently we are doing this for California because it's where all of you live, and it's quite near and dear to my heart, and – path dependence is a real thing – it happened to be that I read a lot more news about California than I do about, say, Maryland. So I had quote tweeted a tweet about California and not Maryland, otherwise, I might have run, like, VaccinateMaryland.

But I told people that if the model works in California – and we have early persuasive evidence that the model works in California – it is extremely likely that this model works across the United States.

We will do this across the entire United States, I will promise you, success for this only looks like rolling it out across the United States. We should not tell anyone this fact, because at the moment, we're a bunch of plucky do-gooders who are overachieving relative to one’s prior of zero impact at all with respect to California.

But if we announce our intention to move it to the entire United States, we have a tiny bit of work done in California and a 98 percent failure rate for all the other states in the nation. We can only announce the national site when we're ready for it.

A fun thing about navigating the PR career is, I had – continue to have – a strong prior in the direction of "transparency is an unalloyed good," yada, yada, yada, and I've come to a somewhat more nuanced perspective over the years.

Sometimes organizations keep secrets for a reason, and it's good to keep the secrets – sometimes for bluntly strategic reasons, but prosocial strategic reasons, you don't want to tell people all of the truth that you know.

That was a hard sell for me, and it's a hard sell for a lot of people. So here I am articulating it to you. There are times where people who broadly consider themselves ethical and who, you know, kiss their children to bed every night, will intentionally not tell all the things that they know to be true and/or say things that –

Did I ever lie about it? I don't remember if I lied about it. I would have lied about it though – there were lives on the line. Lying is less bad than killing people.

Anyhow, that was the first part of the VaccinateCA experience, and we eventually built it to a point where it was essentially the public-private clearinghouse for vaccine information. Folks were googling for the availability of vaccines – they were (somewhat incredibly) not getting it from the United States federal government, but were getting it from the plucky band of geeks who were still organizing on Discord. (Okay, that sounds a little bit self-indulgent.)

Kelsey Piper: No, I really love stories like this on a bunch of levels.

One is that I feel like a lot of the most important decisions in my life in retrospect are ones where I was like, “I'm not full time on this, I could be full time on this – I'm gonna go full time on this” and hearing you describe that… I feel like a lot of people just don't have that affordance.

Sometimes literally don't have that affordance because their regular job won't let them get away with that, but it really does seem to me like an unusual share of the value in a person's life can happen at a moment where they're like, “I'm not full time on this and I could be” – it's really, really great to have examples of that.

Patrick McKenzie: I remember telling my manager and senior executive at Stripe: “the thing I'm expected to do on Monday is to copy edit a blog post on our strategy for doing high-volume testing of transactions during times like Black Friday – which is important for a payment processing company – or I could continue working on the thing that is going to get vastly accelerated vaccine availability in the state of California. You should direct me to do the second and not the first” – and, props for people with decision-making authority there, they made what I view as the major correct decision.

In a different world maybe I would have made different decisions, but we went down the happy path.

I think there's an interesting and under-commented EA-adjacent bit here. I wrote a memo to the other coordinators – I do a lot of my decision-making in memos, and came up in a professional culture like that – and the punchline was essentially, “We should intensify this from a weekend-project level of effort to, no, this is the organization that understands itself to be the United States’ response to COVID infrastructure – and then take actions consistent with being that organization; professionalize immediately, raise money, we're all on full time, let's go. This org probably needs a CEO – lacking a better alternative, you should probably make that me and give me carte blanche to go negotiate with people to get the money, et cetera.”

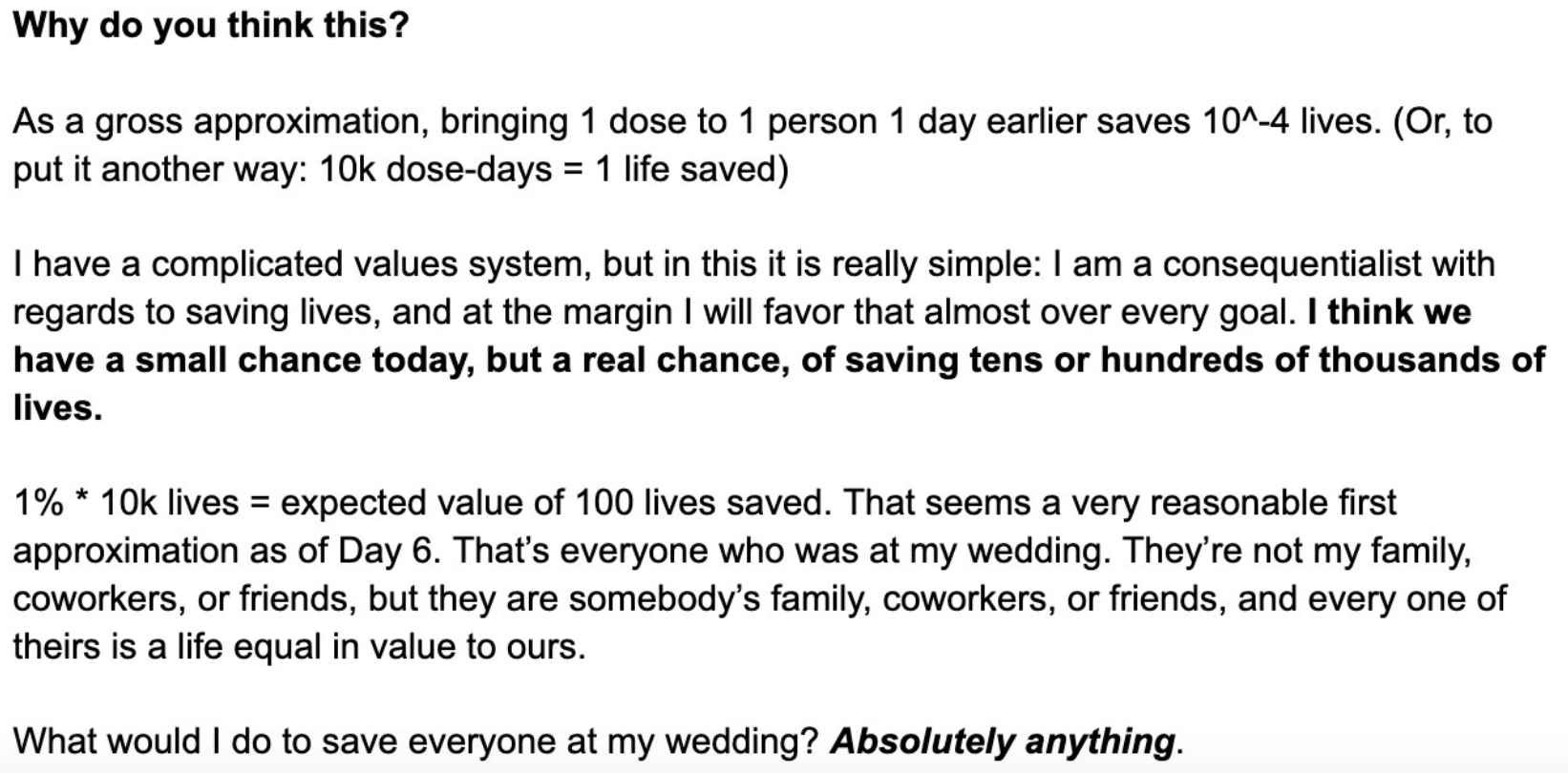

One of the lines in that was an expected value calculation. I'm quoting from memory from 2021, but I said, “On the basis of the evidence in front of us right now, we think you need 10,000 dose-days of acceleration of the vaccine to save one life in expectation. The plausible path of execution for this buys a sufficient number of dose-days such that this project saves 10,000 lives.

“If we think we even have a 1 percent chance of that right now, that's 100 lives in expectation. 100 lives is everyone who is at my wedding.

“What would I do to save the life of everyone at my wedding? Absolutely anything. Therefore, we should do absolutely anything.”
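The arithmetic in that passage can be checked with a short sketch. This is only an illustration of the expected value reasoning as quoted from memory in the conversation; the function and constant names are hypothetical and do not come from the memo itself.

```python
from fractions import Fraction

# Figures as quoted (from memory) in the conversation above.
DOSE_DAYS_PER_LIFE = 10_000        # dose-days of acceleration per life saved, in expectation
LIVES_IF_SUCCESSFUL = 10_000       # lives saved if the plausible path of execution works
P_SUCCESS = Fraction(1, 100)       # "even a 1 percent chance"


def expected_lives_saved(p_success: Fraction, lives_if_successful: int) -> Fraction:
    """Expected value: probability of success times the payoff on success."""
    return p_success * lives_if_successful


def dose_days_required(lives: int, dose_days_per_life: int) -> int:
    """Total dose-days of acceleration implied by a given number of lives saved."""
    return lives * dose_days_per_life


if __name__ == "__main__":
    print("Expected lives saved:", expected_lives_saved(P_SUCCESS, LIVES_IF_SUCCESSFUL))
    print("Dose-days implied by the success case:",
          dose_days_required(LIVES_IF_SUCCESSFUL, DOSE_DAYS_PER_LIFE))
```

Under these quoted figures, the success case implies 100 million dose-days of acceleration, and a 1 percent chance of reaching it is worth 100 lives in expectation. `Fraction` is used only to keep the percentage arithmetic exact.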

[Patrick notes: A screenshot of the relevant portion of the memo appears above.

I'd ballpark the number of lives we actually ended up saving at somewhere between 2,000 and 8,000; it's heavily sensitive to assumptions about Google user behavior that they're extremely cagey about and the changing demographic (principally, age) mix of shot recipients in the early months of 2021. Still, I've never been prouder of underperforming a projected success case.]

Patrick McKenzie: That value system clicked with a number of people around the project. I know one or two of the volunteers were EAs; I don't know if any of the other coordinators would consider themselves EAs, but I consider popularizing that value system to be a good thing that EA has done for the world.

EA has taken some hits to the chin with regards to reputation in the last couple of years. Sam Bankman-Fried was quite affiliated with them in all possible ways to be affiliated, and turns out to have had some ethical challenges, to put it mildly.

After they were taking those hits, I said, to correct the record just a tiny bit – in the knowledge that ‘outing oneself’ as an EA right now is not the most popular thing – “I don't know whether I'm a member of this community, but you influenced me in a way where you are the but-for cause for this thing that probably saved a couple thousand lives.” I am indebted to them for that.

Why is it that this cluster around us of internet weirdo intellectuals – rationalists, EAs, the lines blur a little bit – what's your theory for why this has had as much impact as it has, to the extent it's had impact (positive or negative)?

Kelsey Piper: Man, that's a good question. I do think that there are some fairly simple ideas that when given to smart, ambitious people can let them do a lot more than they would have otherwise.

I kind of think of this as being one of the animating facts about Silicon Valley: you hear this from YC people, like “half of what we do is just say, ‘have you considered being more ambitious?’ to people.” It seems like being around people who model ambition and model dropping everything else to go full time on something, and where that's normal enough that it's possible that you at least have the thought cross your mind – like, should I do that? – just seems like a really powerful bit of leverage.

It seems very easy for a smart, ambitious person who cares a lot about the effects their actions have on the world to mostly not do things that have big effects on the world, just for lack of affordance and lack of an example – so it seems quite powerful just to be like, “Here’s the affordance. Here's an example.”

Sometimes that's, “Okay, we're going to build the COVID infrastructure. We can probably build it. No one else can.” The internet obviously is a huge multiplier on how much smart, ambitious people can accomplish.

(I guess sometimes it's, “we can steal $8 billion and nobody else can,” but…)

Patrick McKenzie: I think many of us grew up with a script, you know, “wait, wait, wait, pay your dues, climb institutions, etc. and eventually you will be in a place with power and authority and be able to bend power and authority to just and socially productive ends.” I think one real strength of the Internet is that you don't need a swearing-in ceremony or a long and laborious process of achieving buy-in from people to get a great deal of very tightly-constrained power.

You can publish anything you want to one page on the Internet; that's your de facto right, and no one's going to try to take it away from you. What can you build given that?

And you don't have to get much permission at all to do it.

That was interestingly one of the early questions at VaccinateCA: do we need permission from the state government to show where the vaccines are distributed? Which is a reasonable question; many of our volunteers were young engineers.

I said, “I know a few things about a few things, and one thing I know is that in the United States, there's this thing called the First Amendment, which guarantees freedom of the press – which is actually much broader than the press traditionally described.”

[Patrick notes: Kelsey audibly and visibly agrees enthusiastically at this point.]

Patrick McKenzie: People were worried about getting in trouble, and I'm like, “I predict, at very high confidence, that it is almost impossible to get in trouble in the United States for publishing true things.” [That] generated just enough incremental confidence in people to continue publishing a true thing which we thought reasonably might embarrass the state of California.

To be fair, embarrassing the state of California for successfully injecting only one dose of every four it was delivered would be a just outcome, particularly when bent towards the end of “let's inject the other three as quickly as possible.” If embarrassment gets that faster, let's embarrass them. If embarrassing them causes it to be delayed by a day, then…

Consequentialism. It's helpful!

Kelsey Piper: When trying to get stuff done, I think it's really, really valuable to – when you're considering alliances and embarrassing people and stuff – to have that return to focus: does it make the thing happen faster? Great, we're doing it. Does it not make the thing happen faster? Great, we're avoiding it.

Patrick McKenzie: We were kind of religious on that, but like, “we'll give anyone the credit for this if necessary.”

It ended up being the case that the federal government, Biden administration, etc. grabbed a lot of credit for a website called vaccines.gov, which was itself run by another non-profit out of Boston Children's Hospital, the Vaccine Finder initiative.